Real-time Bayesian Aggregation (RTBA)

Nuclear threat detection is a difficult task, and there are numerous challenges to overcome: radioactive material sourced for nefarious purposes will likely be shielded to prevent detection, yielding a weak signal that is easily drowned out by background radiation from everyday sources such as buildings, concrete, and even bananas; transport routes, especially past the national border, are largely unknown and would likely be chosen to minimize exposure and avoid detection; and the placement and movement of sensors largely dictate how effective a detection framework will be. See what you can make of the figures below, then keep reading for some more details!

I had the privilege of working with and learning from excellent researchers, especially Dr. Prateek Tandon, in the Auton Lab at Carnegie Mellon University on how to overcome the challenge of a low signal-to-noise ratio (SNR), especially when you have some prior knowledge of the sources of interest and the expected background. A very powerful framework for this problem, developed by the lab and described in an excellent research thesis (Tandon, 2018), is called Bayesian aggregation.

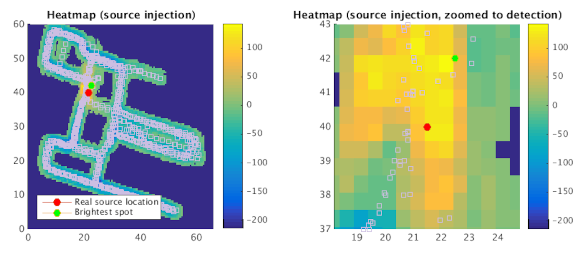

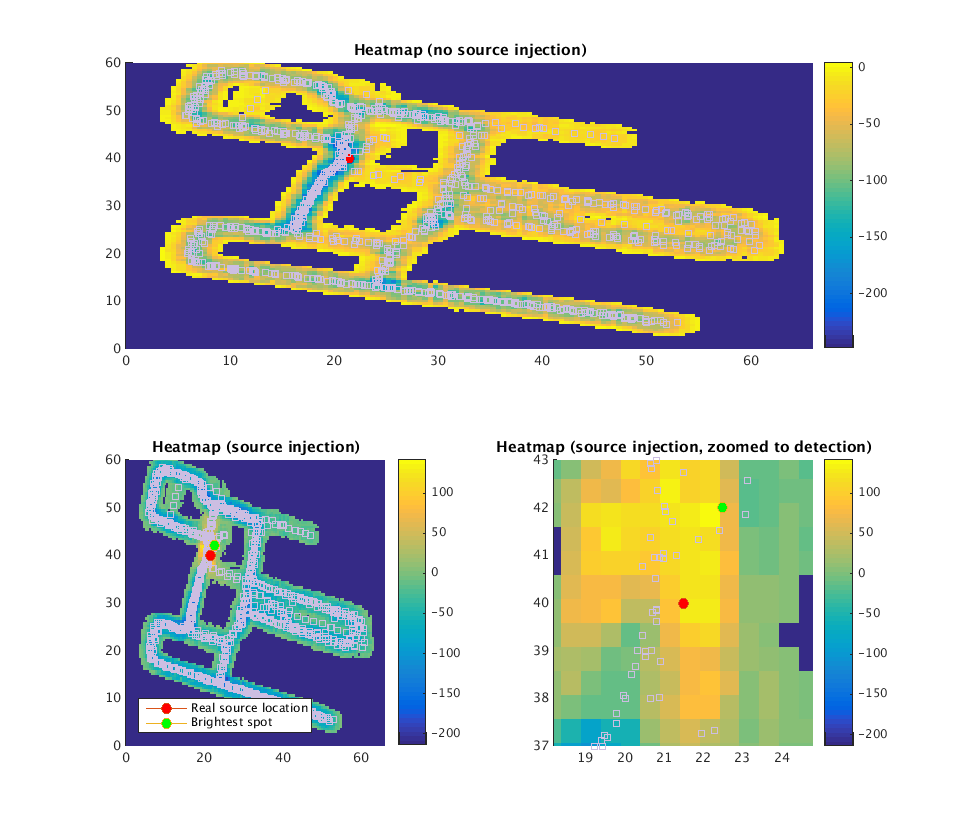

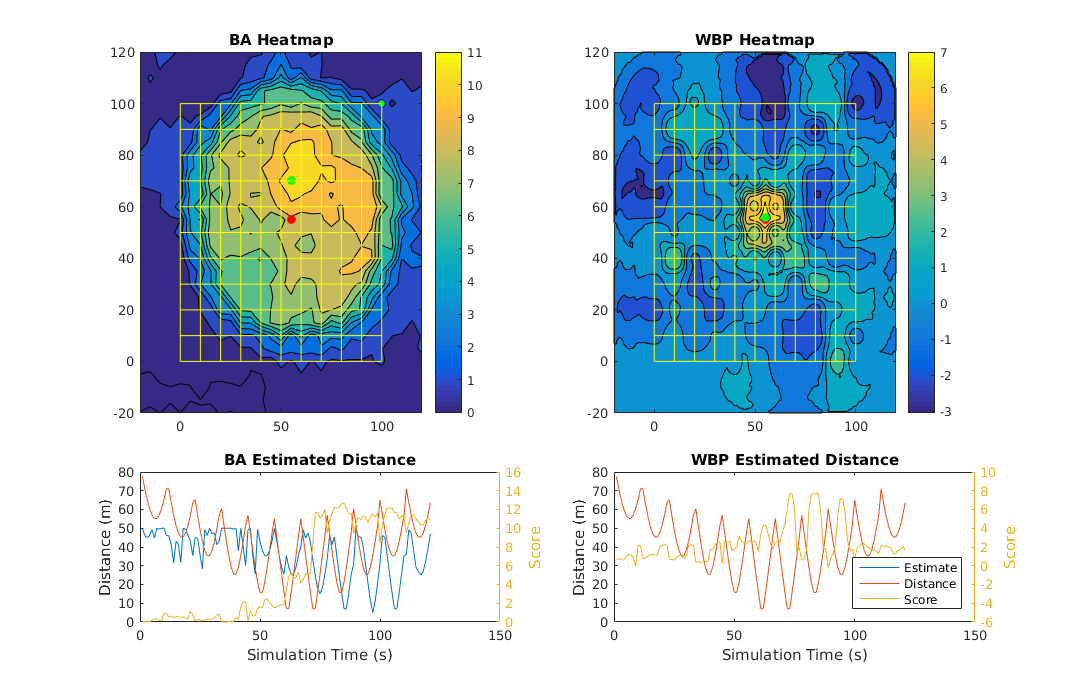

At a high level, the procedure is very simple: you collect samples in real time and update a probability map indicating how likely it is that a source is present at each location. Candidate hypotheses are incrementally updated by aggregating evidence of a source at any particular location given the current observation. Much of the magic comes from the filtering, the background subtraction, and the Bayesian update itself. Using this approach, even very small signals can be localized with high precision. In figure 1 you can see Bayesian aggregation (BA) in action, comparing the same trajectory with and without source injection. Without source injection, there’s no obvious evidence of a threat (note that the heat map shows log probabilities of a source detection, so the values are very low). With source injection, the entire map gets dark except for a high-probability bright spot at the point of the injected source. In figure 2 you can see how a model of the background radiation can be incorporated to increase the SNR and improve localization precision.
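To make the update step a bit more concrete, here is a minimal sketch of the general idea in Python. It is not the lab's implementation: the Poisson count model, the inverse-square falloff, the known source strength and background rate, and every function name below are assumptions I picked for illustration. Each grid cell is one "source is here" hypothesis, and each new reading adds a log-likelihood ratio (source at this cell versus background only) to that cell's running score.

```python
import math

def make_map(width, height, prior_log_odds=0.0):
    """One hypothesis per grid cell, all starting from the same prior (assumed flat)."""
    return {(x, y): prior_log_odds for x in range(width) for y in range(height)}

def expected_source_counts(cell, sensor, strength, min_dist=1.0):
    """Hypothetical inverse-square falloff, clamped so distance never drops below min_dist."""
    dx = cell[0] - sensor[0]
    dy = cell[1] - sensor[1]
    d2 = max(dx * dx + dy * dy, min_dist ** 2)
    return strength / d2

def poisson_log_lr(count, background, expected_source):
    """Log-likelihood ratio of 'source + background' vs 'background only' for one Poisson count."""
    lam0 = background
    lam1 = background + expected_source
    return count * math.log(lam1 / lam0) - (lam1 - lam0)

def update_map(log_odds, sensor, count, background, strength):
    """Fold a single observation into every cell's running log-odds (in place)."""
    for cell in log_odds:
        s = expected_source_counts(cell, sensor, strength)
        log_odds[cell] += poisson_log_lr(count, background, s)
    return log_odds

if __name__ == "__main__":
    background, strength = 5.0, 50.0   # assumed known rates (counts per sample)
    true_cell = (7, 3)                 # injected source location for the demo
    grid = make_map(10, 10)
    # Sensor drives along the bottom edge; counts are noise-free for reproducibility.
    for x in range(10):
        sensor = (x, 0)
        count = background + expected_source_counts(true_cell, sensor, strength)
        update_map(grid, sensor, count, background, strength)
    print(max(grid, key=grid.get))     # cell with the most accumulated evidence
```

In this toy setup the background rate is folded directly into the likelihood ratio, which is a stand-in for the background modeling mentioned above. Real readings would be noisy, so the peak sharpens gradually as observations accumulate, and the filtering step is left out entirely here.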

As one of my first roles in the lab, I used my background in real-time software development to design and implement C/C++ and Java modules that employ the BA and filtering algorithms developed in-house, ready for deployment. It was a very exciting learning experience and I was very happy to play a role in this endeavor.

If you want to learn more about this method, I highly recommend checking out Prateek’s thesis (Tandon, 2018) along with some additional works on BA out of the Auton Lab.